- Research article

- Open access

Quality assessment with diverse studies (QuADS): an appraisal tool for methodological and reporting quality in systematic reviews of mixed- or multi-method studies

BMC Health Services Research volume 21, Article number: 144 (2021)

Abstract

Background

Given the volume of mixed- and multi-methods studies in health services research, the present study sought to develop an appraisal tool to determine the methodological and reporting quality of such studies when included in systematic reviews. Evaluative evidence regarding the design and use of our existing Quality Assessment Tool for Studies with Diverse Designs (QATSDD) was synthesised to enhance and refine it for application across health services research.

Methods

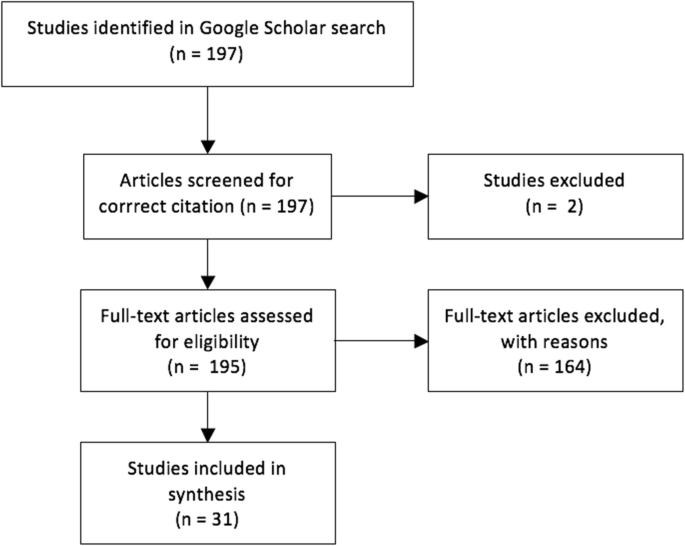

Secondary data were collected through a literature review of all articles identified using Google Scholar that had cited the QATSDD tool from its inception in 2012 to December 2019. First authors of papers that had cited the QATSDD (n=197) and reported using it (n=101) were also invited to provide further evaluative data via a qualitative online survey. Evaluative findings from the survey and literature review were synthesised narratively, and these data were used to identify areas requiring refinement. The refined tool was subject to inter-rater reliability and face and content validity analyses.

Results

Key limitations of the QATSDD tool related to a lack of clarity regarding the tool's scope of use and to the ease of applying its criteria beyond experimental psychological research. The Quality Assessment for Diverse Studies (QuADS) tool was developed as a revised tool to address these limitations. The QuADS tool demonstrated substantial inter-rater reliability (κ = 0.66) and face and content validity for application in systematic reviews of mixed- or multi-methods health services research.

Conclusion

Our findings highlight the perceived value of appraisal tools for determining the methodological and reporting quality of studies in reviews that include heterogeneous studies. The QuADS tool demonstrates strong reliability and ease of use for application to multi- or mixed-methods health services research.

What is known

- Many tools exist for assessing the quality of studies in systematic reviews of either quantitative or qualitative work.
- There is a paucity of tools that assess the quality of studies within systematic reviews that include a diverse group of study designs, particularly mixed- or multi-methods studies.
- The Quality Assessment Tool for Studies with Diverse Designs (QATSDD), published in 2012, was developed to assess the quality of studies with heterogeneous designs, primarily for use in the discipline of Psychology.

What this study adds

- The Quality Assessment for Diverse Studies (QuADS) tool is a refined version of the QATSDD tool. Survey and literature review data were used to enhance the applicability of the tool to health services research and, more specifically, to multi- or mixed-methods research.
- The QuADS tool demonstrates substantial inter-rater reliability and content and face validity.

Background

The inclusion of diverse types of evidence, such as qualitative and mixed- or multi-methods research, is well established in systematic reviews of health services research [1,2,3]. This is important because these methods can address complexities within healthcare that often cannot be readily measured through a single method. Qualitative methods, when used alone, offer explanatory power to enhance understanding of multi-faceted and complex phenomena such as experiences of healthcare and systems [3]. When partnered with quantitative methods, qualitative data can support and add depth of understanding [4, 5].

The appraisal of methodological quality, evidence quality and quality of reporting, both for individual studies and collectively for the studies included in a review, is firmly established for reviews of quantitative studies. There are more than 60 tools currently available to assess the quality of randomised controlled trials alone [6]. Appraisal of the quality of evidence is often used to assess bias, particularly in randomised controlled trials. More recently, quality appraisal has extended to tools for appraising qualitative research, with the emergence of multiple tools in this space [7] creating a topic of extended debate [7,8,9,10]. As a result, reviews that include both qualitative and quantitative research often use separate quality appraisal tools for the quantitative and qualitative studies within the review, often citing the lack of a standard, empirically grounded tool suitable for assessing methodological quality, evidence quality and/or quality of reporting across a variety of study designs [11]. A parallel approach to all aspects of quality appraisal has the strength of acknowledging the unique nature of qualitative research and its epistemological distinction from quantitative approaches. Yet a dual approach does not facilitate the appraisal of methodological, evidence or reporting quality for mixed-methods research, and it creates challenges in appraising these aspects of multi-methods work.

Thus, while acknowledging that the underlying assumptions of quantitative and qualitative research are substantially different, a tool to appraise methodological quality, evidence quality and/or quality of reporting in mixed- or multi-methods research is valuable in enabling researchers to consider the transparency and reporting of key elements of these approaches [12]. Moreover, a tool that is relevant to mixed- and multi-method approaches is significant given the growing recognition of the value of these methodologies in health systems and services research [4]. A single tool that can be used to evaluate methodological quality, evidence quality and quality of reporting across a body of diverse evidence enables reviewers to reflect on the extent of transparency and congruency between the research purpose and its reporting, and on the implications for evidence quality. Such a tool is currently not available for mixed- and multi-methods work, with study heterogeneity a key obstacle to evidence appraisal. Given the complexities of multiple individuals evaluating a diverse set of studies, a supporting tool may also provide an underpinning method for developing a shared understanding of what constitutes quality in research methods, evidence and reporting.

In 2012, the authors published a pragmatic approach to help reviewers appraise, using a single tool (QATSDD), the methodological quality, evidence quality and quality of reporting in reviews that included qualitative, quantitative, mixed- and multi-methods research [13]. The QATSDD has been cited more than 270 times to date and has been used in more than 80 reviews. The tool provides a framework for exploring the congruency, transparency and organised reporting of the research process for research grounded in post-positivist or positivist methodology that informs multiple-methods or mixed-methods designs. The tool was not proposed as a basis for excluding studies from a review, given that any cut-off point to indicate high or low quality would be arbitrary.

The QATSDD tool was originally developed for application in Psychology but has demonstrated wider relevance through its application across a broad range of health services research. Its wide use suggests that researchers value the ability to appraise the quality of evidence from studies that employ or combine a range of methods. Yet the QATSDD tool has some limitations in its ease of use beyond the discipline of Psychology. We therefore aimed to revise, enhance and adapt the QATSDD tool into an updated version, the Quality Assessment for Diverse Studies (QuADS), with greater applicability for health services researchers appraising the quality of methods, evidence and reporting in multi- and/or mixed-methods research.

Methods

Data sources and procedures

Studies citing the QATSDD tool were identified using Google Scholar; citations were imported into reference-management software (EndNote X9.2) and duplicates were removed. Full-text screening of the identified studies, and discussion between two authors (BJ and RH), were used to identify studies that included qualitative evaluative data or commentary regarding the QATSDD tool to inform its enhancement. The following data were extracted: first author, year of publication, country, research discipline, study synopsis, QATSDD reliability and validity data, and qualitative evaluative comments about the use of the tool. Alongside the review of citing studies, all authors who had used the QATSDD in a published, publicly accessible paper (101 authors) were contacted and invited to provide additional feedback through a brief qualitative online survey. Ethical approval to administer the survey was granted by the UNSW Human Research Ethics Committee (HC190645). The survey contained two open-ended, free-text items: 1) ‘When applying the QATSDD in your research, what were the strengths of the tool and what did this enable you to achieve?’ and 2) ‘When applying the QATSDD in your research, what were the limitations or challenges you experienced and how could these be addressed in a revised version of the tool?’ The survey was administered by one author (BJ) to the study authors’ email addresses via the Qualtrics online survey software, with one follow-up reminder. Consent was implied through completion and submission of the survey.

Data analysis and synthesis

A narrative synthesis [14] was then undertaken with the heterogeneous data emerging from the literature review and the qualitative comments provided by survey respondents. To develop the primary synthesis, two authors (BJ, RH) independently undertook a line-by-line review of each study and of the survey content. The evaluative comments were labelled and merged into a table of the items arising. The authors then met to discuss the commonly occurring items and created initial themes. In a further stage, the relationships within the data were explored and the robustness of the synthesis was assessed. The initial themes were discussed and refined with two further authors (RL, PH) into final themes, which were tabulated. The research team then collectively discussed areas for clarification and areas requiring change. Refinements to the tool, drawing upon the synthesised data, were made iteratively through collaboration, review of the tool and discussion within the author team.

Preliminary internal assessment and external evaluation

Face and content validity were explored by providing the revised QuADS tool to 10 researchers with expertise in reviewing studies with diverse designs within systematic reviews. The researchers worked across different disciplines (psychology, sociology, health services research, pharmacy) and methodologies (quantitative, qualitative and mixed-methods) relevant to health in the UK or Australia. Each researcher was sent the tool via email and asked to 1) provide feedback on the perceived suitability of the items within the tool for their own field and methods of research and 2) report any items requiring clarification for ease of use or readability. Their feedback was discussed between the authors and used to revise the tool iteratively through a series of minor amendments to the wording and ordering of the tool items. The resulting QuADS tool was also subject to inter-rater reliability analysis by a psychologist, a public health researcher and a health services researcher, who applied it to 40 studies from a recent systematic review [15] published by a colleague within our department who was external to this study; this yielded a kappa of 0.65.
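Inter-rater reliability for QuADS is reported as a kappa statistic. As a minimal sketch of how such a figure can be computed, the Python below implements Cohen's kappa for two raters, κ = (p_o − p_e)/(1 − p_e), where p_o is observed agreement and p_e is agreement expected by chance, and labels the result using the Landis and Koch bands, in which 0.61–0.80 is conventionally described as "substantial". The reviewer scores and the 0–3 range are hypothetical, chosen for illustration only. The text does not state which kappa variant was used: with three raters, Fleiss' kappa or an average of pairwise Cohen's kappas are common choices, and a weighted kappa may better suit ordinal item scores.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters scoring the same items on a categorical scale."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given identical scores by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over categories.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical category throughout; kappa is undefined,
        # so this sketch (by assumed convention) reports perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def landis_koch_label(kappa: float) -> str:
    """Descriptive band for a kappa value, following Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical 0-3 scores from two reviewers on ten criteria (illustrative only).
reviewer_1 = [3, 2, 2, 1, 0, 3, 2, 1, 3, 2]
reviewer_2 = [3, 2, 1, 1, 0, 3, 2, 2, 3, 2]
k = cohens_kappa(reviewer_1, reviewer_2)
print(round(k, 2), landis_koch_label(k))  # 0.71 substantial
```

Here the reviewers agree on 8 of 10 items (p_o = 0.8), while their marginal score distributions imply p_e = 0.3, so κ = 0.5/0.7 ≈ 0.71. Kappa is preferred over raw percentage agreement because it discounts the agreement two reviewers would reach by chance alone.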

Results

Results of the review

One hundred and ninety-seven citations were attributed to Sirriyeh et al.’s (2012) article ‘Reviewing studies with diverse designs: the development and evaluation of a new tool’ [13], and 31 of these studies met the inclusion criteria by including evaluative data or comments (Table 1). The study selection process is shown in Fig. 1. Of the 101 authors who had cited the QATSDD paper and reported using the tool in their publication, 13 did not receive the email, 10 had moved institutions or were on leave, 74 did not provide any additional feedback and 1 replied stating that they had not been the individual who used the tool. Three respondents provided survey feedback, which was synthesised with the findings from the reviewed articles and aligned with them.

Excluded studies

Studies were excluded because 97 had cited the paper but made no further comments, 38 had cited the tool and provided a rationale for its selection as the preferred tool but did not report reliability and validity data, and 21 reported reliability and validity data that consistently confirmed the tool was reliable and valid across multiple contexts but made no qualitative comments. A further two papers were incorrectly attributed to the article on Google Scholar, and eight were non-English papers.
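The screening and survey figures reported above can be cross-checked with simple arithmetic. The Python sketch below uses only counts stated in the text (the category labels are paraphrased); it confirms that the exclusions leave the 31 included studies and that all 101 contacted authors are accounted for. Using all 101 contacted authors as the denominator for the response rate is an assumption; a denominator excluding the 23 authors who did not receive the email or had moved or were on leave would give a slightly higher rate.

```python
# Figures as reported in the Results; category labels are paraphrased.
TOTAL_CITATIONS = 197
excluded = {
    "cited without further comment": 97,
    "rationale for selection, no reliability/validity data": 38,
    "reliability/validity data, no qualitative comments": 21,
    "incorrectly attributed on Google Scholar": 2,
    "non-English": 8,
}
included = TOTAL_CITATIONS - sum(excluded.values())

# Outcomes for the 101 authors who cited the QATSDD and reported using it.
survey_outcomes = {
    "email not received": 13,
    "moved institution or on leave": 10,
    "no additional feedback": 74,
    "had not personally used the tool": 1,
    "provided feedback": 3,
}
contacted = sum(survey_outcomes.values())
# Assumed denominator: every author contacted, whether or not the email reached them.
response_rate = survey_outcomes["provided feedback"] / contacted

print(included)                # 31
print(contacted)               # 101
print(f"{response_rate:.1%}")  # 3.0%
```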

Findings regarding the QATSDD tool

The synthesis revealed a number of perceived strengths of the tool, including its strong reliability and validity [33, 55, 66]. All of the reviews within the 39 included articles that used the tool confirmed its reliability and validity. Further strengths were the ability of the QATSDD to be applied when appraising diverse study designs [66, 95, 96] and its comprehensive list of indicators [97]. The breadth of disciplines in which the tool had been applied was notable, and the final group of included studies reflected this range: psychology, medicine, health sciences, allied health and health services. Authors who had employed the tool commented that it was valued for its inclusion of a wider range of important issues relating to research quality, such as the involvement of end users in the research design and process, facilitating a comprehensive analysis [31, 95]. The synthesis also revealed opportunities to clarify and improve the tool, including one substantial commentary on the QATSDD tool and its applications [12]. Five key areas emerged in which there were opportunities for enhancement or further clarification. As part of the present study, a number of revisions were made to the tool to address these findings; they are described in relation to each finding below.

Scope and purpose of the tool

Further clarification of the tool’s scope appeared necessary, to make clear that it is intended predominantly for reviews of mixed- or multi-method studies and that it also provides an approach to assessing the transparency and quality of study reporting. Instances in which the tool had been applied to exclude studies from a review were noted, and this appeared to be due to a lack of detail about the tool’s purpose available to reviewers. A lack of clarity about how to score studies using the QATSDD was apparent, with queries including whether weighting was required for particular criteria and whether a cut-off was needed to delineate high- and low-quality studies [12, 55, 91, 98]. Such queries indicate that the tool’s purpose, to stimulate discussion about the quality and transparency of reporting of each study, may not have been clear. There is no evidence to suggest that any criterion is more important than another or that a particular score indicates high or low quality; therefore, any cut-off would be arbitrary. The tool enables researchers to consider and discuss each element of a study in the context of its research aims and to explore the extent to which each quality criterion is met. This may then stimulate discussion of each criterion’s relative importance in the context of their own review. A summary of the purpose and scope of the tool is included in a new ‘User guide’ (supplementary file 1) that accompanies the tool.

Examples for each criterion

The need for more examples within the tool’s criteria was highlighted by Fenton et al. [12] and Lamore et al. [66]. These papers commented that more explicit examples from both quantitative and qualitative perspectives would assist users when scoring. These authors found the tool challenging when the examples did not match the methods used in the papers they were reviewing, and highlighted an opportunity to include a wider range of research methodologies in the examples. Additional examples may also address the difficulty of distinguishing between scores. Limiting responses to a dichotomous scale or a 3-point scoring system was suggested in one commentary; however, a dichotomous scale does not provide sufficient response options for the many items that are more complex than a yes or no, and three-point scales are recognised as leading to overuse of neutral responses [12].

Theoretical and conceptual framework

A common challenge identified was applying the notion of a ‘theoretical framework’, particularly outside the discipline of Psychology [12, 91]. Fenton et al. [12] highlighted the need for additional guidance on the definition of a theoretical framework and, specifically, on whether reference to theoretical concepts or assumptions was relevant to this criterion. Notably, few studies in the included reviews scored highly on this criterion, a further indication that it may have required review. To resolve this, the criterion ‘Theoretical framework’ was revised to ‘Explicit consideration of theories or concepts that frame the study in the introduction’, with relevant exemplars.

Quantitative bias, appropriate sampling and analytic methods

Fenton et al. indicated that the tool held a quantitative bias [12], suggesting that the wording and selection of examples may favour quantitative studies. Clausen et al. [33] also suggested that qualitative studies scored poorly when appraised with the tool. Criteria related to appropriate sampling and analytic methods appeared to be challenging to assess, and it was decided to update these in the light of current perspectives on qualitative methodology, particularly regarding matters such as the need (or lack of need) for data saturation. Explicit examples and language were added to each descriptor to balance recognition of qualitative and quantitative research. The criterion concerning sample size was revised and reduced to ‘Appropriate sampling to address the research aim/s.’

Discussion

Quality appraisal is a widely debated and dynamic area, with emerging opportunities but also increasing demands [98]. Our findings show that the QATSDD tool has been used in a wide variety of health fields, including psychology, allied health, medicine, public health, nursing, health services and social sciences, and that the tool demonstrated high reliability. Nevertheless, a range of minor limitations regarding the scope of use of the tool, the balance between qualitative and quantitative ontologies and ease of use through examples also came to light. In the context of increasing mixed- and multi-methods research in health services, this paper has described the development of the QuADS tool, an augmentation of the QATSDD that aims to be one of the few pragmatic tools enabling quality assessment across a diverse range of study designs [99]. QuADS provides a basis for research teams to reflect on methodological and evidence quality and to identify limitations in the quality of reporting of studies. QuADS could also be applied alongside other tools that focus on appraising methodological quality, to provide an expanded analysis where needed [100].

Increasing recognition of the value of mixed-methods approaches in health services research for addressing complex healthcare questions is reflected in the existence of more than 10 quality assessment methods for mixed-methods work [101]. These approaches have focused on the justification for and application of mixed methods in a study, considering approaches to study design and data synthesis. Current methods for exploring quality in mixed-methods studies may not readily apply to multi-methods work or to a collection of heterogeneous studies in a systematic review [101]. Given the multitude of quantitative or qualitative quality appraisal tools, a segregated approach is often taken to explore quality when reviews include heterogeneous studies, which limits researchers’ ability to comment on the body of evidence collectively.

Four tools, including the QATSDD, have been developed to date to enable an integrated quality assessment [13, 102,103,104]. Two of these tools provide a segregated analysis of the qualitative and quantitative elements of research studies rather than a single set of items applicable to both [102, 104]. The third provides a method to explore the completeness of reporting of studies with mixed or multiple methods [103]. Among existing tools, QuADS enables a brief, integrated assessment to be undertaken across a body of evidence within a review.

Limitations

This manuscript reports the first stage in revising a pragmatic tool that can be used to help guide the reporting of research and to assess the quality of non-trial-based mixed- and multi-methods studies. Methodological, evidence and reporting quality are three important areas, each complex in its own right. Addressing all of these elements with a single tool is valuable for stimulating discussion and reflection between reviewers, but it provides only a high-level analysis of these different quality domains. The tool therefore does not provide a conclusive judgement of research quality that can be used to decide whether to include or exclude studies from a review. Despite the inclusion of a wide range of literature using the QATSDD tool, the response rate to the survey component of this work was very low, which may have shaped the information provided. This project benefited from drawing upon the insights of those who had used the tool to shape the design of the revised tool, yet it is possible that those who experienced difficulty in using the QATSDD tool ultimately did not include it in their outputs and were not readily identifiable for inclusion in this project. As a result, we may not have identified all of the areas requiring refinement. Whilst the study panel members were all experienced in reporting studies with diverse designs in multiple locations internationally, the panel process did not constitute the formal Delphi approach required to register the QuADS tool with the EQUATOR Network as a reporting guideline. This is an important subsequent step that we seek to complete to improve the rigour and evidence base for the new tool.

Conclusion

Quality appraisal continues to be a critical component of systematic reviews. Increasing recognition of the value of multi- and mixed-methods research for addressing complex health services research questions calls for a tool such as QuADS, which demonstrates good reliability and allows researchers to appraise heterogeneous studies in systematic reviews.

Availability of data and materials

Data may be requested by contacting the first author.

Change history

16 August 2021

The Additional File 2 was added to this article.

16 March 2021

A Correction to this paper has been published: https://doi.org/10.1186/s12913-021-06261-2

Abbreviations

- QATSDD: Quality Assessment Tool for Studies with Diverse Designs
- QuADS: Quality Assessment for Diverse Studies

References

Booth A. Searching for qualitative research for inclusion in systematic reviews: a structured methodological review. Syst Rev. 2016;5(1):74.

Dixon-Woods M, et al. How can systematic reviews incorporate qualitative research? A critical perspective. Qual Res. 2006;6(1):27–44.

Dixon-Woods M, Fitzpatrick R. Qualitative research in systematic reviews: has established a place for itself. Br Med J. 2001;323(7316):765–6.

Collins KM, Onwuegbuzie AJ, Sutton IL. A model incorporating the rationale and purpose for conducting mixed methods research in special education and beyond. Learn Disabil Contemp J. 2006;4(1):67–100.

Morse JM. Mixed method design: principles and procedures. Routledge; 2016.

Verhagen AP, et al. The art of quality assessment of RCTs included in systematic reviews. J Clin Epidemiol. 2001;54(7):651–4.

Dixon-Woods M, et al. The problem of appraising qualitative research. BMJ Qual Safety. 2004;13(3):223–5.

Carroll C, Booth A. Quality assessment of qualitative evidence for systematic review and synthesis: is it meaningful, and if so, how should it be performed? Res Synth Methods. 2015;6(2):149–54.

Dixon-Woods M, et al. Appraising qualitative research for inclusion in systematic reviews: a quantitative and qualitative comparison of three methods. J Health Serv Res Policy. 2007;12(1):42–7.

Hannes K, Macaitis K. A move to more systematic and transparent approaches in qualitative evidence synthesis: update on a review of published papers. Qual Res. 2012;12(4):402–42.

Kmet LM, Cook LS, Lee RC. Standard quality assessment criteria for evaluating primary research papers from a variety of fields; 2004.

Fenton L, Lauckner H, Gilbert R. The QATSDD critical appraisal tool: comments and critiques. J Eval Clin Pract. 2015;21(6):1125–8.

Sirriyeh R, et al. Reviewing studies with diverse designs: the development and evaluation of a new tool. J Eval Clin Pract. 2012;18(4):746–52.

Popay J, et al. Guidance on the conduct of narrative synthesis in systematic reviews. In: A product from the ESRC methods programme. United Kingdom: Lancaster University; 2006.

Chauhan A, et al. The safety of health care for ethnic minority patients: a systematic review. Int J Equity Health. 2020;19(1):1–25.

Abda A, Bolduc ME, Tsimicalis A, Rennick J, Vatcher D, Brossard-Racine M. Psychosocial outcomes of children and adolescents with severe congenital heart defect: a systematic review and meta-analysis. J Pediatr Psychol. 2019;44(4):463–77.

Adam A, Jensen JD. What is the effectiveness of obesity related interventions at retail grocery stores and supermarkets?—a systematic review. BMC Public Health. 2016;16(1):1–8.

Albutt AK, O'Hara JK, Conner MT, Fletcher SJ, Lawton RJ. Is there a role for patients and their relatives in escalating clinical deterioration in hospital? A systematic review. Health Expect. 2017;20(5):818–25.

Alsawy S, Mansell W, McEvoy P, Tai S. What is good communication for people living with dementia? A mixed-methods systematic review. Int Psychogeriatr. 2017;29(11):1785–800.

Arbour-Nicitopoulos KP, Grassmann V, Orr K, McPherson AC, Faulkner GE, Wright FV. A scoping review of inclusive out-of-school time physical activity programs for children and youth with physical disabilities. Adapt Phys Act Q. 2018;35(1):111–38.

Augestad LB. Self-concept and self-esteem among children and young adults with visual impairment: a systematic review. Cogent Psychol. 2017;4.

Augestad LB. Mental health among children and young adults with visual impairments: a systematic review. J Vis Impairment Blindness. 2017;111(5):411–25.

Aztlan-James EA, McLemore M, Taylor D. Multiple unintended pregnancies in US women: a systematic review. Womens Health Issues. 2017;27(4):407–13.

Band R, Wearden A, Barrowclough C. Patient outcomes in association with significant other responses to chronic fatigue syndrome: a systematic review of the literature. Clin Psychol Sci Pract. 2015;22(1):29–46.

Batten G, Oakes PM, Alexander T. Factors associated with social interactions between deaf children and their hearing peers: a systematic literature review. J Deaf Stud Deaf Educ. 2014;19(3):285–302.

Baxter R, Taylor N, Kellar I, Lawton R. What methods are used to apply positive deviance within healthcare organisations? A systematic review. BMJ Qual Saf. 2016;25(3):190–201.

Blackwell JE, Alammar HA, Weighall AR, Kellar I, Nash HM. A systematic review of cognitive function and psychosocial well-being in school-age children with narcolepsy. Sleep Med Rev. 2017;34:82–93.

Blake DF, Crowe M, Mitchell SJ, Aitken P, Pollock NW. Vibration and bubbles: a systematic review of the effects of helicopter retrieval on injured divers. Diving Hyperb Med. 2018;48(4):241.

Bradford N, Chambers S, Hudson A, et al. Evaluation frameworks in health services: an integrative review of use, attributes and elements. J Clin Nurs. 2019;28(13–14):2486–98.

Braun SE, Kinser PA, Rybarczyk B. Can mindfulness in health care professionals improve patient care? An integrative review and proposed model. Transl Behav Med. 2019;9(2):187–201.

Burton A, et al. How effective are mindfulness-based interventions for reducing stress among healthcare professionals? A systematic review and meta-analysis. Stress Health. 2017;33(1):3–13.

Carrara A, Schulz PJ. The role of health literacy in predicting adherence to nutritional recommendations: a systematic review. Patient Educ Couns. 2018;101(1):16–24.

Clausen C, Cummins K, Dionne K. Educational interventions to enhance competencies for interprofessional collaboration among nurse and physician managers: an integrative review. J Interprof Care. 2017;31(6):685–95.

Connolly F, Byrne D, Lydon S, Walsh C, O’Connor P. Barriers and facilitators related to the implementation of a physiological track and trigger system: a systematic review of the qualitative evidence. Int J Qual Health Care. 2017;29(8):973–80.

Curran C, Lydon S, Kelly M, Murphy A, Walsh C, O’Connor P. A systematic review of primary care safety climate survey instruments: their origins, psychometric properties, quality, and usage. J Patient Saf. 2018;14(2):e9–18.

Deming A, Jennings JL. The absence of evidence-based practices (EBPs) in the treatment of sexual abusers: recommendations for moving toward the use of a true EBP model. Sex Abus. 2019:1079063219843897.

Dias CC, Rodrigues PP, da Costa-Pereira A, Magro F. Clinical prognostic factors for disabling Crohn's disease: a systematic review and meta-analysis. World J Gastroenterol. 2013;19(24):3866.

Emerson LM, Leyland A, Hudson K, Rowse G, Hanley P, Hugh-Jones S. Teaching mindfulness to teachers: a systematic review and narrative synthesis. Mindfulness. 2017;8(5):1136–49.

Fenton L, White C, Gallant K, Hutchinson S, Hamilton-Hinch B, Gilbert R, Lauckner H. The benefits of recreation for the recovery and social inclusion of individuals with mental health challenges: An integrative review.

Filmer T, Herbig B. Effectiveness of interventions teaching cross-cultural competencies to health-related professionals with work experience: a systematic review. J Contin Educ Health Prof. 2018;38(3):213–21.

Fylan B. Medicines management after hospital discharge: patients’ personal and professional networks (Doctoral dissertation, University of Bradford).

Graham-Clarke E, Rushton A, Noblet T, Marriott J. Facilitators and barriers to non-medical prescribing–a systematic review and thematic synthesis. PLoS One. 2018;13(4):e0196471.

Gillham R, Wittkowski A. Outcomes for women admitted to a mother and baby unit: a systematic review. Int J Women’s Health. 2015;7:459.

Gkika S, Wittkowski A, Wells A. Social cognition and metacognition in social anxiety: a systematic review. Clin Psychol Psychother. 2018;25(1):10–30.

Hardy M, Johnson L, Sharples R, Boynes S, Irving D. Does radiography advanced practice improve patient outcomes and health service quality? A systematic review. Br J Radiol. 2016;89(1062):20151066.

Harris K, Band RJ, Cooper H, Macintyre VG, Mejia A, Wearden AJ. Distress in significant others of patients with chronic fatigue syndrome: a systematic review of the literature. Br J Health Psychol. 2016;21(4):881–93.

Harrison R, Cohen AW, Walton M. Patient safety and quality of care in developing countries in Southeast Asia: a systematic literature review. Int J Qual Health Care. 2015;27(4):240–54.

Harrison R, Walton M, Manias E, Smith-Merry J, Kelly P, Iedema R, Robinson L. The missing evidence: a systematic review of patients’ experiences of adverse events in health care. Int J Qual Health Care. 2015;27(6):424–42.

Harrison R, Birks Y, Hall J, Bosanquet K, Harden M, Iedema R. The contribution of nurses to incident disclosure: a narrative review. Int J Nurs Stud. 2014;51(2):334–45.

Hawkins RD. Psychological factors underpinning child-animal relationships and preventing animal cruelty (Doctoral dissertation, University of Edinburgh).

Heath G, Montgomery H, Eyre C, Cummins C, Pattison H, Shaw R. Developing a tool to support communication of parental concerns when a child is in hospital. Healthcare. 2016;4(1):9.

Hesselstrand M, Samuelsson K, Liedberg G. Occupational therapy interventions in chronic pain–a systematic review. Occup Ther Int. 2015;22(4):183–94.

Hill S, Adams J, Hislop J. Conducting contingent valuation studies in older and young populations: a rapid review. UK: Institute of Health and Society, Newcastle University; 2015.

Holl M, van den Dries L, Wolf JR. Interventions to prevent tenant evictions: a systematic review. Health Soc Care Community. 2016;24(5):532–46.

Iddon J, Dickson J, Unwin J. Positive psychological interventions and chronic non-cancer pain: a systematic review of the literature. Int J Appl Positive Psychol. 2016;1:1–25.

Jaarsma EA, Smith B. Promoting physical activity for disabled people who are ready to become physically active: a systematic review. Psychol Sport Exerc. 2018;37:205–23.

Jackman PC, Hawkins RM, Crust L, Swann C. Flow states in exercise: a systematic review. Psychol Sport Exerc. 2019;45:101546.

Jackson-Blott K, Hare D, Davies B, Morgan S. Recovery-oriented training programmes for mental health professionals: a narrative literature review. Ment Health Prev. 2019;13:113–27.

Johnson D, Horton E, Mulcahy R, Foth M. Gamification and serious games within the domain of domestic energy consumption: a systematic review. Renew Sust Energ Rev. 2017;73:249–64.

Jones N, Bartlett H. The impact of visual impairment on nutritional status: a systematic review. Br J Vis Impair. 2018;36(1):17–30.

Khajehaminian MR, Ardalan A, Keshtkar A, et al. A systematic literature review of criteria and models for casualty distribution in trauma related mass casualty incidents. Injury. 2018;49(11):1959–68.

Klingenberg O, Holkesvik AH, Augestad LB. Digital learning in mathematics for students with severe visual impairment: a systematic review. Br J Vis Impair. 2019;00(0).

Kolbe AR. ‘It’s not a gift when it comes with price’: a qualitative study of transactional sex between UN peacekeepers and Haitian citizens. Stability Int J Secur Dev. 2015.

Kumar MB, Wesche S, McGuire C. Trends in metis-related health research (1980–2009): identification of research gaps. Can J Public Health. 2012;103(1):23–8.

Lambe KA, Lydon S, Madden C, et al. Hand hygiene compliance in the ICU: a systematic review. Crit Care Med. 2019;47(9):1251–7.

Lamore K, Montalescot L, Untas A. Treatment decision-making in chronic diseases: what are the family members’ roles, needs and attitudes? A systematic review. Patient Educ Couns. 2017;100(12):2172–81.

Levy I, Attias S, Ben-Arye E, Bloch B, Schiff E. Complementary medicine for treatment of agitation and delirium in older persons: a systematic review and narrative synthesis. Int J Geriatr Psychiatry. 2017;32(5):492–508.

Madden C, Lydon S, Curran C, Murphy AW, O’Connor P. Potential value of patient record review to assess and improve patient safety in general practice: a systematic review. Eur J Gen Pract. 2018;24(1):192–201.

Martins-Junior PA. Dental treatment under general anaesthetic and children’s oral health-related quality of life. Evid Based Dent. 2017;18(3):68–9.

McClelland G, Rodgers H, Flynn D, Price CI. The frequency, characteristics and aetiology of stroke mimic presentations: a narrative review. Eur J Emerg Med. 2019;26(1):2–8.

McPherson AC, Hamilton J, Kingsnorth S, et al. Communicating with children and families about obesity and weight-related topics: a scoping review of best practices. Obes Rev. 2017;18(2):164–82.

Medford E, Hare DJ, Wittkowski A. Demographic and psychosocial influences on treatment adherence for children and adolescents with PKU: a systematic review. JIMD Rep. 2017;39:107–16.

Medway M, Rhodes P. Young people’s experience of family therapy for anorexia nervosa: a qualitative meta-synthesis. Adv Eat Disord. 2016;4(2):189–207.

Miller L, Alele FO, Emeto TI, Franklin RC. Epidemiology, risk factors and measures for preventing drowning in Africa: a systematic review. Medicina. 2019;55(10).

Mimmo L, Harrison R, Hinchcliff R. Patient safety vulnerabilities for children with intellectual disability in hospital: a systematic review and narrative synthesis. BMJ Paediatr Open. 2018;2(1).

Nghiem T, Louli J, Treherne SC, Anderson CE, Tsimicalis A, Lalloo C, Stinson JN, Thorstad K. Pain experiences of children and adolescents with osteogenesis imperfecta. Clin J Pain. 2017;33(3):271–80.

Nghiem T, Chougui K, Michalovic A, Lalloo C, Stinson J, Lafrance ME, Palomo T, Dahan-Oliel N, Tsimicalis A. Pain experiences of adults with osteogenesis imperfecta: an integrative review. Can J Pain. 2018;2(1):9–20.

Noblet T, Marriott J, Graham-Clarke E, Rushton A. Barriers to and facilitators of independent non-medical prescribing in clinical practice: a mixed-methods systematic review. J Physiother. 2017;63(4):221–34.

O'Dowd E, Lydon S, O'Connor P, Madden C, Byrne D. A systematic review of 7 years of research on entrustable professional activities in graduate medical education, 2011-2018. Med Educ. 2019;53(3):234–49.

Orr K, Wright FV, Grassmann V, McPherson AC, Faulkner GE, Arbour-Nicitopoulos KP. Children and youth with impairments in social skills and cognition in out-of-school time inclusive physical activity programs: a scoping review. Int J Dev Disabil. 2019.

Pini S, Hugh-Jones S, Gardner PH. What effect does a cancer diagnosis have on the educational engagement and school life of teenagers? A systematic review. Psycho-Oncology. 2012;21(7):685–94.

Powney M. Attachment and trauma in people with intellectual disabilities. United Kingdom: The University of Manchester; 2014.

Quinn C, Toms G. Influence of positive aspects of dementia caregiving on caregivers’ well-being: a systematic review. The Gerontologist. 2018.

Rosella L, Bowman C, Pach B, Morgan S, Fitzpatrick T, Goel V. The development and validation of a meta-tool for quality appraisal of public health evidence: meta quality appraisal tool (MetaQAT). Public Health. 2016;136:57–65.

Salman Popattia A, Winch S, La Caze A. Ethical responsibilities of pharmacists when selling complementary medicines: a systematic review. Int J Pharm Pract. 2018;26(2):93–103.

Sibley A. Nurse prescribers’ exploration of diabetes patients’ beliefs about their medicines (Doctoral dissertation, University of Southampton).

Ten Hoorn S, Elbers PW, Girbes AR, Tuinman PR. Communicating with conscious and mechanically ventilated critically ill patients: a systematic review. Crit Care. 2016;20(1):1–4.

Tomlin M. Patients at the centre of design to improve the quality of care; exploring the experience-based co-design approach within the NHS [PhD thesis]. Leeds: School of Psychology, The University of Leeds; 2018.

Tuominen O, Lundgrén-Laine H, Flinkman M, Boucht S, Salanterä S. Rescheduling nursing staff with information technology-based staffing solutions: a scoping review. Int J Healthc Technol Manag. 2018;17(2–3):145–67.

Vyth EL, Steenhuis IH, Brandt HE, Roodenburg AJ, Brug J, Seidell JC. Methodological quality of front-of-pack labeling studies: a review plus identification of research challenges. Nutr Rev. 2012;70(12):709–20.

Wallace A, et al. Traumatic dental injury research: on children or with children? Dent Traumatol. 2017;33(3):153–9.

Walton M, Harrison R, Burgess A, Foster K. Workplace training for senior trainees: a systematic review and narrative synthesis of current approaches to promote patient safety. Postgrad Med J. 2015;91(1080):579–87.

Wells E. The role of parenting interventions in promoting treatment adherence in cystic fibrosis. United Kingdom: The University of Manchester; 2016.

Wright CJ. Likes, dislikes, must-haves, and must-nots: an exploratory study into the housing preferences of adults with neurological disability. School of Human Services and Social Work, Griffith University; 2017.

Arbour-Nicitopoulos KP, et al. A scoping review of inclusive out-of-school time physical activity programs for children and youth with physical disabilities. Adapt Phys Act Q. 2018;35(1):111–38.

Noblet T, et al. Barriers to and facilitators of independent non-medical prescribing in clinical practice: a mixed-methods systematic review. J Physiother. 2017;63(4):221–34.

Tomlin M. Patients at the centre of design to improve the quality of care; exploring the experience-based co-design approach within the NHS [PhD thesis]. Leeds: School of Psychology, The University of Leeds; 2018.

Harrison JK, et al. Using quality assessment tools to critically appraise ageing research: a guide for clinicians. Age Ageing. 2017;46(3):359–65.

Hong QN, et al. Improving the content validity of the mixed methods appraisal tool: a modified e-Delphi study. J Clin Epidemiol. 2019;111:49–59.

Hong QN, Fàbregues S, Bartlett G, Boardman F, Cargo M, Dagenais P, Gagnon MP, Griffiths F, Nicolau B, O’Cathain A, Rousseau M-C, Vedel I, Pluye P. The mixed methods appraisal tool (MMAT) version 2018 for information professionals and researchers. Educ Inf. 2018;34(4):285–91.

Curry L, Nunez-Smith M. Mixed methods in health sciences research: a practical primer. Thousand Oaks: SAGE Publications, Inc.; 2015.

Long AF. Evaluative tool for mixed method studies. Leeds: School of Healthcare, University of Leeds; 2005.

Crowe M, Sheppard L, Campbell A. Reliability analysis for a proposed critical appraisal tool demonstrated value for diverse research designs. J Clin Epidemiol. 2012;65(4):375–83.

Pace R, et al. Testing the reliability and efficiency of the pilot mixed methods appraisal tool (MMAT) for systematic mixed studies review. Int J Nurs Stud. 2012;49(1):47–53.

Acknowledgements

We would like to thank the academics who contributed feedback on the proposed changes and revised tool.

Funding

This is unfunded research.

Author information

Contributions

RH conceived the study and RH, RL and PG collectively developed the research design. BJ completed data collection and analysis. RH and BJ developed initial revisions to the QATSDD tool. All authors collectively finalised the QuADS tool and user guide. All authors contributed to the development of the manuscript and reviewed and approved the final submission.

Ethics declarations

Ethics approval and consent to participate

Ethics approval was granted by the UNSW Health Research Ethics Committee. Consent was provided by participants through a tick box when returning the survey.

Consent for publication

Not applicable.

Competing interests

Associate Professor Reema Harrison is an Associate Editor for BMC Health Services Research.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article was revised: “The author Peter Gardner’s name has been corrected.”

Supplementary Information

Additional file 2.

QuADS Criteria.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Harrison, R., Jones, B., Gardner, P. et al. Quality assessment with diverse studies (QuADS): an appraisal tool for methodological and reporting quality in systematic reviews of mixed- or multi-method studies. BMC Health Serv Res 21, 144 (2021). https://doi.org/10.1186/s12913-021-06122-y

DOI: https://doi.org/10.1186/s12913-021-06122-y